In recent years, autonomous weapon systems—often dubbed “killer robots”—have shifted from the realm of science fiction into stark reality. These machines, capable of selecting and engaging targets without human intervention, are raising urgent ethical questions that cut to the core of modern warfare. As technology rapidly advances, so does the debate over whether delegating life-and-death decisions to algorithms is a step too far. This article dives into the complex ethics surrounding autonomous weapons, exploring the concerns of human rights advocates, military strategists, and technologists alike, and asking: where should we draw the line?

Table of Contents

- Understanding the Moral Implications of Autonomous Warfare

- The Role of International Law in Governing Lethal AI

- Balancing Military Advantage with Accountability and Human Rights

- Policy Recommendations for Ethical Development and Deployment

- In Conclusion

Understanding the Moral Implications of Autonomous Warfare

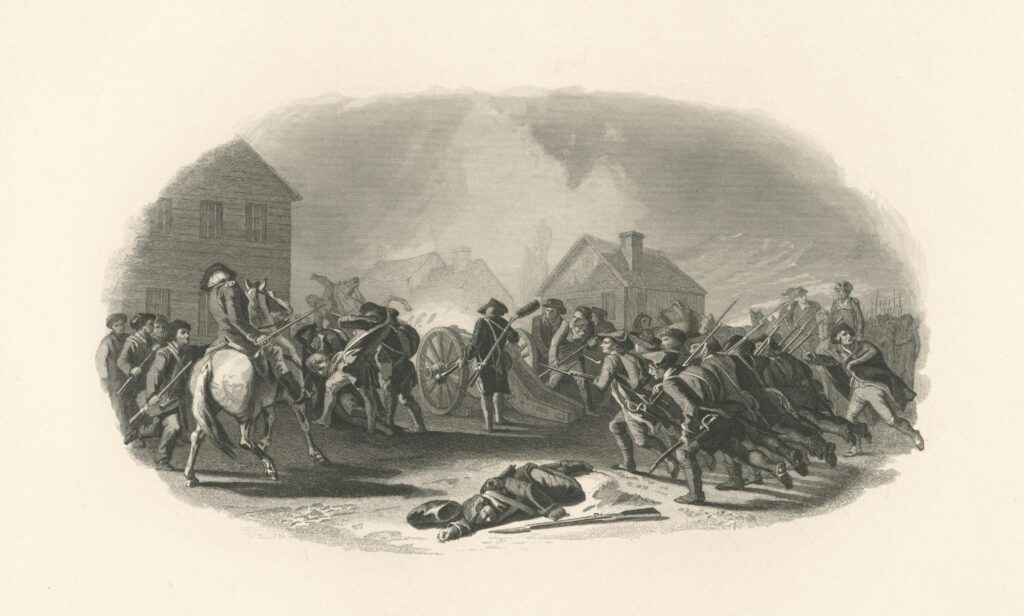

As autonomous weapon systems advance, they challenge traditional ethical frameworks by delegating life-and-death decisions to machines. This shift raises profound questions about accountability and the sanctity of human judgment in warfare. Can a machine truly interpret the complexities of a combat environment, and if not, what unintended consequences follow from handing it that task? Errors, malfunctions and hacking introduce new moral risks, particularly where human control is limited or entirely absent. Critics argue that removing human discretion from the battlefield erodes the ethical restraints that govern armed conflict, potentially leading to indiscriminate violence or violations of international humanitarian law.

Supporters, however, highlight potential benefits, such as increased precision and fewer casualties among their own soldiers. They suggest that autonomous weapons could reduce unnecessary suffering if programmed with strict ethical guidelines. Yet this optimistic view rests on assumptions about a machine’s ability to apply ethical principles. That is a monumental challenge, given how ambiguous and context-dependent human moral judgment is. Key concerns include:

- Responsibility: Who is held liable when autonomous systems malfunction or commit war crimes—manufacturers, programmers, military commanders, or the machines themselves?

- Discrimination: Can autonomous weapons reliably distinguish between combatants and civilians in complex environments?

- Loss of Human Empathy: Will removing humans from the immediate decision loop desensitize those who wage war and lower the threshold for armed conflict?

These dilemmas underscore the urgent need for global dialogue and regulation to ensure that technological advancements do not outpace our moral compass.

The Role of International Law in Governing Lethal AI

International law provides a critical framework for addressing the complexities of autonomous lethal systems. Existing instruments such as the Geneva Conventions and their Additional Protocols set out foundational principles for protecting civilians and limiting unnecessary suffering in armed conflict. However, these rules were drafted decades before modern artificial intelligence, which makes them difficult to apply to autonomous weapon systems. Accountability is also uncertain: it could lie with the programmer, the manufacturer or the operator, and this complicates enforcement and legal recourse. For these reasons, many experts advocate updating existing agreements or negotiating new ones specific to AI-driven weapons, with an emphasis on transparency and stringent compliance mechanisms.

Several principles guide efforts to regulate lethal AI. Some are drawn from existing international humanitarian law, and others have been proposed specifically for AI:

- Distinction: Ensuring AI can reliably differentiate between combatants and non-combatants.

- Proportionality: Ensuring that the civilian harm expected from an automated attack is not excessive in relation to the anticipated military advantage.

- Accountability: Clarifying who is legally responsible for the AI’s decisions and outcomes.

- Human Oversight: Preserving an essential role for human judgment in decisions to use lethal force.

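Without adequate international legal standards, there is a risk of an AI arms race that undermines global security and the ethical conduct of war. Ongoing diplomatic efforts hold promise for crafting governance models that reconcile innovation with humanitarian values. These include the talks of the Group of Governmental Experts on lethal autonomous weapons systems under the UN Convention on Certain Conventional Weapons (CCW), alongside wider expert dialogue.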

Balancing Military Advantage with Accountability and Human Rights

The integration of autonomous weapon systems into military operations raises complex questions about maintaining a strategic edge while upholding core ethical principles. These technologies promise enhanced precision and reduced risk to personnel, but handing lethal decisions to machines introduces unprecedented challenges. Critics argue that without stringent oversight, the potential for unintended civilian harm grows, undermining established norms of warfare and eroding trust in military institutions. Balancing innovation and ethical responsibility demands rigorous frameworks that ensure these systems are not only effective but also transparent and accountable.

To address these concerns, governments and defense organizations must prioritize:

- Clear accountability structures: Defining who bears responsibility for autonomous system actions, from developers to commanding officers.

- Robust ethical guidelines: Adopting and adhering to international standards that govern the deployment and use of autonomous weapons in conflict zones.

- Human-in-the-loop mechanisms: Maintaining meaningful human control so that no lethal decision is made without human judgment.

- Regular audits and transparency reports: Enabling public and institutional scrutiny to build trust and reduce misuse.

Only by embedding these principles can militaries harness the advantages of autonomous systems without compromising human rights or accountability on the global stage.

Policy Recommendations for Ethical Development and Deployment

To navigate the complex ethical landscape of autonomous weapon systems, stakeholders must prioritize transparency and accountability. Establishing international frameworks that mandate clear guidelines on the permissible use of these technologies can help prevent misuse and unintended consequences. Robust oversight mechanisms should be put in place, ensuring that autonomous systems remain under meaningful human control and that developers are held responsible for ethical breaches. It’s also crucial to invest in interdisciplinary research that combines ethics, engineering, and law to create well-rounded policies that reflect the diverse concerns of global communities.

Furthermore, fostering global collaboration is essential to harmonize regulations and mitigate arms races fueled by autonomous weapon capabilities. Policymakers should engage not only with military and technological experts but also with civil society representatives and ethicists, promoting inclusive dialogue. Key recommendations include:

- Negotiating binding international treaties that prohibit fully autonomous lethal weapons.

- Mandating human-in-the-loop controls (a human authorizes each engagement) or human-on-the-loop controls (a human supervises and can intervene or abort) to uphold accountability in deployment.

- Supporting transparency in development to build public trust and enable informed public consent.

- Encouraging ethical training programs for engineers and military personnel working with these systems.

In Conclusion

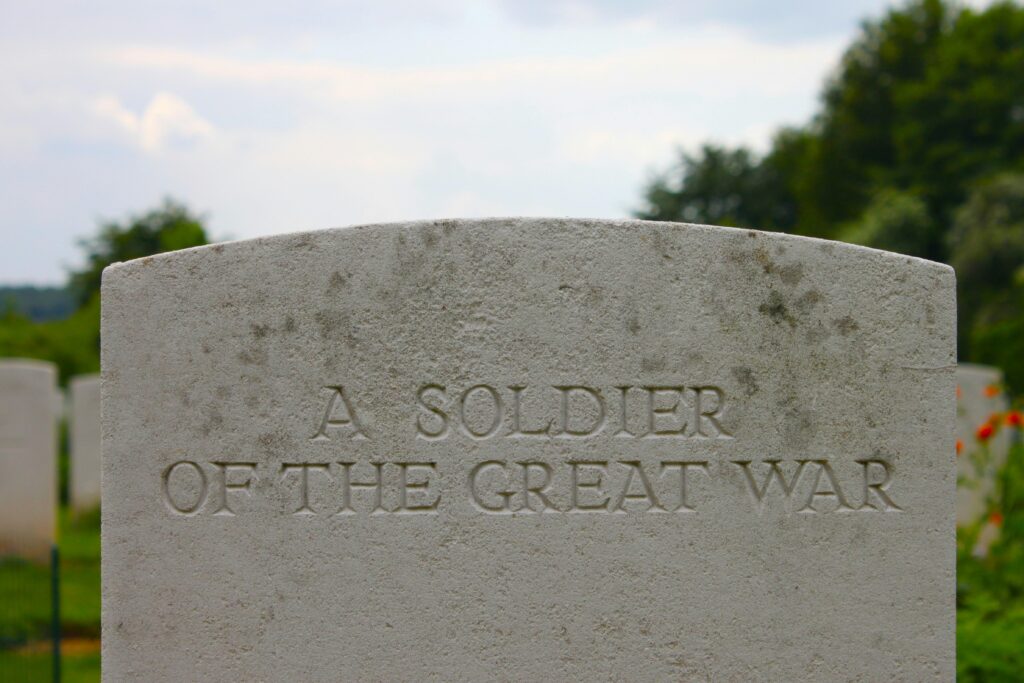

As autonomous weapon systems continue to advance rapidly, the ethical questions they raise become ever more urgent. Balancing potential military advantages against profound moral and legal implications is a challenge that requires global collaboration and thoughtful regulation. Ultimately, the decisions made today will shape not only the future of warfare but also the principles of accountability and humanity in conflict. Staying informed and engaged in this debate is crucial: as these technologies evolve, so too must our collective commitment to ethical responsibility.